mirror of

https://github.com/facebookresearch/pytorch3d.git

synced 2026-02-26 08:06:00 +08:00

rename master branch to main

Summary: Change doc references to master branch to its new name main.

Reviewed By: nikhilaravi

Differential Revision: D30303018

fbshipit-source-id: cfdbb207dfe3366de7e0ca759ed56f4b8dd894d1
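The rename described in the summary is mechanical, so it can be illustrated with a short script. This is a minimal sketch, not the tooling actually used for this commit: the helper name `rename_branch_refs` and the regular expression are assumptions made for illustration. It rewrites `master` only inside GitHub URLs for this repository.

```python
import re

# Assumption: only URLs that point at this repository should be rewritten.
REPO = "facebookresearch/pytorch3d"

# Matches the URL prefix up to the branch name, for both github.com
# (blob/tree/edit views) and raw.githubusercontent.com links.
_BRANCH_URL = re.compile(
    r"(github\.com/" + re.escape(REPO) + r"/(?:blob|tree|edit)/"
    r"|raw\.githubusercontent\.com/" + re.escape(REPO) + r"/)master/"
)


def rename_branch_refs(text: str) -> str:
    """Hypothetical helper: replace 'master' with 'main' in repository URLs only."""
    return _BRANCH_URL.sub(r"\g<1>main/", text)
```

Restricting the match to URLs leaves ordinary prose that mentions "master" untouched. References built from variables, such as the `${siteConfig.repoUrl}/edit/master/...` template string changed later in this diff, would not be caught by this pattern and need a manual edit.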

committed by

Facebook GitHub Bot

parent

103da63393

commit

b0dd0c8821

30

README.md

@@ -1,4 +1,4 @@
-<img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/master/.github/pytorch3dlogo.png" width="900"/>
+<img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/.github/pytorch3dlogo.png" width="900"/>

 [](https://circleci.com/gh/facebookresearch/pytorch3d)
 [](https://anaconda.org/pytorch3d/pytorch3d)

@@ -35,25 +35,25 @@ PyTorch3D is released under the [BSD License](LICENSE).

 Get started with PyTorch3D by trying one of the tutorial notebooks.

-|<img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/master/.github/dolphin_deform.gif" width="310"/>|<img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/master/.github/bundle_adjust.gif" width="310"/>|
+|<img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/.github/dolphin_deform.gif" width="310"/>|<img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/.github/bundle_adjust.gif" width="310"/>|
 |:-----------------------------------------------------------------------------------------------------------:|:--------------------------------------------------:|
-| [Deform a sphere mesh to dolphin](https://github.com/facebookresearch/pytorch3d/blob/master/docs/tutorials/deform_source_mesh_to_target_mesh.ipynb)| [Bundle adjustment](https://github.com/facebookresearch/pytorch3d/blob/master/docs/tutorials/bundle_adjustment.ipynb) |
+| [Deform a sphere mesh to dolphin](https://github.com/facebookresearch/pytorch3d/blob/main/docs/tutorials/deform_source_mesh_to_target_mesh.ipynb)| [Bundle adjustment](https://github.com/facebookresearch/pytorch3d/blob/main/docs/tutorials/bundle_adjustment.ipynb) |

-| <img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/master/.github/render_textured_mesh.gif" width="310"/> | <img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/master/.github/camera_position_teapot.gif" width="310" height="310"/>
+| <img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/.github/render_textured_mesh.gif" width="310"/> | <img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/.github/camera_position_teapot.gif" width="310" height="310"/>
 |:------------------------------------------------------------:|:--------------------------------------------------:|
-| [Render textured meshes](https://github.com/facebookresearch/pytorch3d/blob/master/docs/tutorials/render_textured_meshes.ipynb)| [Camera position optimization](https://github.com/facebookresearch/pytorch3d/blob/master/docs/tutorials/camera_position_optimization_with_differentiable_rendering.ipynb)|
+| [Render textured meshes](https://github.com/facebookresearch/pytorch3d/blob/main/docs/tutorials/render_textured_meshes.ipynb)| [Camera position optimization](https://github.com/facebookresearch/pytorch3d/blob/main/docs/tutorials/camera_position_optimization_with_differentiable_rendering.ipynb)|

-| <img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/master/.github/pointcloud_render.png" width="310"/> | <img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/master/.github/cow_deform.gif" width="310" height="310"/>
+| <img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/.github/pointcloud_render.png" width="310"/> | <img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/.github/cow_deform.gif" width="310" height="310"/>
 |:------------------------------------------------------------:|:--------------------------------------------------:|
-| [Render textured pointclouds](https://github.com/facebookresearch/pytorch3d/blob/master/docs/tutorials/render_colored_points.ipynb)| [Fit a mesh with texture](https://github.com/facebookresearch/pytorch3d/blob/master/docs/tutorials/fit_textured_mesh.ipynb)|
+| [Render textured pointclouds](https://github.com/facebookresearch/pytorch3d/blob/main/docs/tutorials/render_colored_points.ipynb)| [Fit a mesh with texture](https://github.com/facebookresearch/pytorch3d/blob/main/docs/tutorials/fit_textured_mesh.ipynb)|

-| <img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/master/.github/densepose_render.png" width="310"/> | <img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/master/.github/shapenet_render.png" width="310" height="310"/>
+| <img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/.github/densepose_render.png" width="310"/> | <img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/.github/shapenet_render.png" width="310" height="310"/>
 |:------------------------------------------------------------:|:--------------------------------------------------:|
-| [Render DensePose data](https://github.com/facebookresearch/pytorch3d/blob/master/docs/tutorials/render_densepose.ipynb)| [Load & Render ShapeNet data](https://github.com/facebookresearch/pytorch3d/blob/master/docs/tutorials/dataloaders_ShapeNetCore_R2N2.ipynb)|
+| [Render DensePose data](https://github.com/facebookresearch/pytorch3d/blob/main/docs/tutorials/render_densepose.ipynb)| [Load & Render ShapeNet data](https://github.com/facebookresearch/pytorch3d/blob/main/docs/tutorials/dataloaders_ShapeNetCore_R2N2.ipynb)|

-| <img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/master/.github/fit_textured_volume.gif" width="310"/> | <img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/master/.github/fit_nerf.gif" width="310" height="310"/>
+| <img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/.github/fit_textured_volume.gif" width="310"/> | <img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/.github/fit_nerf.gif" width="310" height="310"/>
 |:------------------------------------------------------------:|:--------------------------------------------------:|
-| [Fit Textured Volume](https://github.com/facebookresearch/pytorch3d/blob/master/docs/tutorials/fit_textured_volume.ipynb)| [Fit A Simple Neural Radiance Field](https://github.com/facebookresearch/pytorch3d/blob/master/docs/tutorials/fit_simple_neural_radiance_field.ipynb)|
+| [Fit Textured Volume](https://github.com/facebookresearch/pytorch3d/blob/main/docs/tutorials/fit_textured_volume.ipynb)| [Fit A Simple Neural Radiance Field](https://github.com/facebookresearch/pytorch3d/blob/main/docs/tutorials/fit_simple_neural_radiance_field.ipynb)|

@@ -64,9 +64,9 @@ Learn more about the API by reading the PyTorch3D [documentation](https://pytorc

 We also have deep dive notes on several API components:

-- [Heterogeneous Batching](https://github.com/facebookresearch/pytorch3d/tree/master/docs/notes/batching.md)
-- [Mesh IO](https://github.com/facebookresearch/pytorch3d/tree/master/docs/notes/meshes_io.md)
-- [Differentiable Rendering](https://github.com/facebookresearch/pytorch3d/tree/master/docs/notes/renderer_getting_started.md)
+- [Heterogeneous Batching](https://github.com/facebookresearch/pytorch3d/tree/main/docs/notes/batching.md)
+- [Mesh IO](https://github.com/facebookresearch/pytorch3d/tree/main/docs/notes/meshes_io.md)
+- [Differentiable Rendering](https://github.com/facebookresearch/pytorch3d/tree/main/docs/notes/renderer_getting_started.md)

 ### Overview Video

@@ -129,7 +129,7 @@ If you are using the pulsar backend for sphere-rendering (the `PulsarPointRender

 Please see below for a timeline of the codebase updates in reverse chronological order. We are sharing updates on the releases as well as research projects which are built with PyTorch3D. The changelogs for the releases are available under [`Releases`](https://github.com/facebookresearch/pytorch3d/releases), and the builds can be installed using `conda` as per the instructions in [INSTALL.md](INSTALL.md).

-**[Feb 9th 2021]:** PyTorch3D [v0.4.0](https://github.com/facebookresearch/pytorch3d/releases/tag/v0.4.0) released with support for implicit functions, volume rendering and a [reimplementation of NeRF](https://github.com/facebookresearch/pytorch3d/tree/master/projects/nerf).
+**[Feb 9th 2021]:** PyTorch3D [v0.4.0](https://github.com/facebookresearch/pytorch3d/releases/tag/v0.4.0) released with support for implicit functions, volume rendering and a [reimplementation of NeRF](https://github.com/facebookresearch/pytorch3d/tree/main/projects/nerf).

 **[November 2nd 2020]:** PyTorch3D [v0.3.0](https://github.com/facebookresearch/pytorch3d/releases/tag/v0.3.0) released, integrating the pulsar backend.

@@ -159,7 +159,7 @@ html_theme_options = {"collapse_navigation": True}
 def url_resolver(url):
     if ".html" not in url:
         url = url.replace("../", "")
-        return "https://github.com/facebookresearch/pytorch3d/blob/master/" + url
+        return "https://github.com/facebookresearch/pytorch3d/blob/main/" + url
     else:
         if DEPLOY:
             return "http://pytorch3d.readthedocs.io/" + url
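To make the effect of this hunk concrete, here is a minimal runnable sketch of the resolver after the change. The hunk does not show how `DEPLOY` is defined or what is returned when it is false, so the `DEPLOY = False` default and the final fallback `return url` are assumptions added only to make the example self-contained.

```python
# Assumption: in the real configuration file this flag is defined elsewhere.
DEPLOY = False


def url_resolver(url: str) -> str:
    """Map a relative documentation link to an absolute URL."""
    if ".html" not in url:
        # Source files are linked to the repository on the renamed branch.
        url = url.replace("../", "")
        return "https://github.com/facebookresearch/pytorch3d/blob/main/" + url
    if DEPLOY:
        return "http://pytorch3d.readthedocs.io/" + url
    # Hypothetical fallback: the non-deploy branch is not shown in the hunk.
    return url
```

With this sketch, `url_resolver("../pytorch3d/ops/cubify.py")` resolves to the `blob/main/` path for that file, which is the behaviour the one-line change is meant to produce.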

@@ -26,7 +26,7 @@ The need for different mesh batch modes is inherent to the way PyTorch operators
 <img src="assets/meshrcnn.png" alt="meshrcnn" width="700" align="middle" />


-[meshes]: https://github.com/facebookresearch/pytorch3d/blob/master/pytorch3d/structures/meshes.py
-[graphconv]: https://github.com/facebookresearch/pytorch3d/blob/master/pytorch3d/ops/graph_conv.py
-[vert_align]: https://github.com/facebookresearch/pytorch3d/blob/master/pytorch3d/ops/vert_align.py
+[meshes]: https://github.com/facebookresearch/pytorch3d/blob/main/pytorch3d/structures/meshes.py
+[graphconv]: https://github.com/facebookresearch/pytorch3d/blob/main/pytorch3d/ops/graph_conv.py
+[vert_align]: https://github.com/facebookresearch/pytorch3d/blob/main/pytorch3d/ops/vert_align.py
 [meshrcnn]: https://github.com/facebookresearch/meshrcnn

@@ -5,7 +5,7 @@ sidebar_label: Cubify

 # Cubify

-The [cubify operator](https://github.com/facebookresearch/pytorch3d/blob/master/pytorch3d/ops/cubify.py) converts an 3D occupancy grid of shape `BxDxHxW`, where `B` is the batch size, into a mesh instantiated as a [Meshes](https://github.com/facebookresearch/pytorch3d/blob/master/pytorch3d/structures/meshes.py) data structure of `B` elements. The operator replaces every occupied voxel (if its occupancy probability is greater than a user defined threshold) with a cuboid of 12 faces and 8 vertices. Shared vertices are merged, and internal faces are removed resulting in a **watertight** mesh.
+The [cubify operator](https://github.com/facebookresearch/pytorch3d/blob/main/pytorch3d/ops/cubify.py) converts an 3D occupancy grid of shape `BxDxHxW`, where `B` is the batch size, into a mesh instantiated as a [Meshes](https://github.com/facebookresearch/pytorch3d/blob/main/pytorch3d/structures/meshes.py) data structure of `B` elements. The operator replaces every occupied voxel (if its occupancy probability is greater than a user defined threshold) with a cuboid of 12 faces and 8 vertices. Shared vertices are merged, and internal faces are removed resulting in a **watertight** mesh.

 The operator provides three alignment modes {*topleft*, *corner*, *center*} which define the span of the mesh vertices with respect to the voxel grid. The alignment modes are described in the figure below for a 2D grid.
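The Cubify text in this hunk says that each occupied voxel becomes a cuboid of 12 triangular faces and that internal faces are removed. The pure-Python sketch below illustrates only that counting rule for a single (unbatched) grid. It is not the PyTorch3D implementation, and the function name `count_surface_triangles` and the nested-list grid layout are assumptions made for illustration.

```python
from typing import List

Grid = List[List[List[float]]]  # one occupancy grid, indexed as grid[d][h][w]

# Offsets to the six axis-aligned neighbours of a voxel.
_NEIGHBOURS = [(-1, 0, 0), (1, 0, 0), (0, -1, 0), (0, 1, 0), (0, 0, -1), (0, 0, 1)]


def count_surface_triangles(grid: Grid, thresh: float = 0.5) -> int:
    """Count the triangles left after removing faces shared by occupied voxels.

    Assumes a non-empty grid of shape D x H x W.
    """
    depth, height, width = len(grid), len(grid[0]), len(grid[0][0])

    def occupied(d: int, h: int, w: int) -> bool:
        inside = 0 <= d < depth and 0 <= h < height and 0 <= w < width
        return inside and grid[d][h][w] > thresh

    exposed_sides = 0
    for d in range(depth):
        for h in range(height):
            for w in range(width):
                if not occupied(d, h, w):
                    continue
                # A cube side survives only if no occupied voxel touches it.
                for dd, dh, dw in _NEIGHBOURS:
                    if not occupied(d + dd, h + dh, w + dw):
                        exposed_sides += 1
    # Each square side of a cube is split into two triangles.
    return 2 * exposed_sides
```

A single occupied voxel keeps all 6 sides, giving the 12 faces mentioned in the text. Two adjacent voxels lose the shared side on each cube, leaving 10 sides and therefore 20 triangles.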

@@ -9,12 +9,12 @@ sidebar_label: Data loaders

 ShapeNet is a dataset of 3D CAD models. ShapeNetCore is a subset of the ShapeNet dataset and can be downloaded from https://www.shapenet.org/. There are two versions ShapeNetCore: v1 (55 categories) and v2 (57 categories).

-The PyTorch3D [ShapeNetCore data loader](https://github.com/facebookresearch/pytorch3d/blob/master/pytorch3d/datasets/shapenet/shapenet_core.py) inherits from `torch.utils.data.Dataset`. It takes the path where the ShapeNetCore dataset is stored locally and loads models in the dataset. The ShapeNetCore class loads and returns models with their `categories`, `model_ids`, `vertices` and `faces`. The `ShapeNetCore` data loader also has a customized `render` function that renders models by the specified `model_ids (List[int])`, `categories (List[str])` or `indices (List[int])` with PyTorch3D's differentiable renderer.
+The PyTorch3D [ShapeNetCore data loader](https://github.com/facebookresearch/pytorch3d/blob/main/pytorch3d/datasets/shapenet/shapenet_core.py) inherits from `torch.utils.data.Dataset`. It takes the path where the ShapeNetCore dataset is stored locally and loads models in the dataset. The ShapeNetCore class loads and returns models with their `categories`, `model_ids`, `vertices` and `faces`. The `ShapeNetCore` data loader also has a customized `render` function that renders models by the specified `model_ids (List[int])`, `categories (List[str])` or `indices (List[int])` with PyTorch3D's differentiable renderer.

-The loaded dataset can be passed to `torch.utils.data.DataLoader` with PyTorch3D's customized collate_fn: `collate_batched_meshes` from the `pytorch3d.dataset.utils` module. The `vertices` and `faces` of the models are used to construct a [Meshes](https://github.com/facebookresearch/pytorch3d/blob/master/pytorch3d/structures/meshes.py) object representing the batched meshes. This `Meshes` representation can be easily used with other ops and rendering in PyTorch3D.
+The loaded dataset can be passed to `torch.utils.data.DataLoader` with PyTorch3D's customized collate_fn: `collate_batched_meshes` from the `pytorch3d.dataset.utils` module. The `vertices` and `faces` of the models are used to construct a [Meshes](https://github.com/facebookresearch/pytorch3d/blob/main/pytorch3d/structures/meshes.py) object representing the batched meshes. This `Meshes` representation can be easily used with other ops and rendering in PyTorch3D.

 ### R2N2

 The R2N2 dataset contains 13 categories that are a subset of the ShapeNetCore v.1 dataset. The R2N2 dataset also contains its own 24 renderings of each object and voxelized models. The R2N2 Dataset can be downloaded following the instructions [here](http://3d-r2n2.stanford.edu/).

-The PyTorch3D [R2N2 data loader](https://github.com/facebookresearch/pytorch3d/blob/master/pytorch3d/datasets/r2n2/r2n2.py) is initialized with the paths to the ShapeNet dataset, the R2N2 dataset and the splits file for R2N2. Just like `ShapeNetCore`, it can be passed to `torch.utils.data.DataLoader` with a customized collate_fn: `collate_batched_R2N2` from the `pytorch3d.dataset.r2n2.utils` module. It returns all the data that `ShapeNetCore` returns, and in addition, it returns the R2N2 renderings (24 views for each model) along with the camera calibration matrices and a voxel representation for each model. Similar to `ShapeNetCore`, it has a customized `render` function that supports rendering specified models with the PyTorch3D differentiable renderer. In addition, it supports rendering models with the same orientations as R2N2's original renderings.
+The PyTorch3D [R2N2 data loader](https://github.com/facebookresearch/pytorch3d/blob/main/pytorch3d/datasets/r2n2/r2n2.py) is initialized with the paths to the ShapeNet dataset, the R2N2 dataset and the splits file for R2N2. Just like `ShapeNetCore`, it can be passed to `torch.utils.data.DataLoader` with a customized collate_fn: `collate_batched_R2N2` from the `pytorch3d.dataset.r2n2.utils` module. It returns all the data that `ShapeNetCore` returns, and in addition, it returns the R2N2 renderings (24 views for each model) along with the camera calibration matrices and a voxel representation for each model. Similar to `ShapeNetCore`, it has a customized `render` function that supports rendering specified models with the PyTorch3D differentiable renderer. In addition, it supports rendering models with the same orientations as R2N2's original renderings.
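The data loader text above relies on a customized `collate_fn` because models in one batch generally have different numbers of vertices and faces, so they cannot simply be stacked. The sketch below shows the general idea with plain Python containers. It is not `collate_batched_meshes` itself: the function name `collate_to_lists` and the sample dictionaries are hypothetical, and the real function additionally builds a `Meshes` object as the text describes.

```python
from typing import Any, Dict, List


def collate_to_lists(batch: List[Dict[str, Any]]) -> Dict[str, List[Any]]:
    """Hypothetical collate function: gather each field into a per-batch list.

    Keeping one list entry per model avoids stacking arrays whose sizes differ.
    Assumes every sample has the same keys as the first one.
    """
    if not batch:
        return {}
    return {key: [sample[key] for sample in batch] for key in batch[0]}


# Two toy "models" with different vertex counts (illustrative data only).
samples = [
    {"model_id": "a", "verts": [[0, 0, 0], [1, 0, 0], [0, 1, 0]], "faces": [[0, 1, 2]]},
    {"model_id": "b", "verts": [[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]], "faces": [[0, 1, 2], [0, 1, 3]]},
]
collated = collate_to_lists(samples)
```

A function with this shape can be passed as the `collate_fn` argument of `torch.utils.data.DataLoader`, which calls it with the list of samples that make up one batch.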

@@ -74,7 +74,7 @@ Since v0.3, [pulsar](https://arxiv.org/abs/2004.07484) can be used as a backend

 <img align="center" src="assets/pulsar_bm.png" width="300">

-Pulsar's processing steps are tightly integrated CUDA kernels and do not work with custom `rasterizer` and `compositor` components. We provide two ways to use Pulsar: (1) there is a unified interface to match the PyTorch3D calling convention seamlessly. This is, for example, illustrated in the [point cloud tutorial](https://github.com/facebookresearch/pytorch3d/blob/master/docs/tutorials/render_colored_points.ipynb). (2) There is a direct interface available to the pulsar backend, which exposes the full functionality of the backend (including opacity, which is not yet available in PyTorch3D). Examples showing its use as well as the matching PyTorch3D interface code are available in [this folder](https://github.com/facebookresearch/pytorch3d/tree/master/docs/examples).
+Pulsar's processing steps are tightly integrated CUDA kernels and do not work with custom `rasterizer` and `compositor` components. We provide two ways to use Pulsar: (1) there is a unified interface to match the PyTorch3D calling convention seamlessly. This is, for example, illustrated in the [point cloud tutorial](https://github.com/facebookresearch/pytorch3d/blob/main/docs/tutorials/render_colored_points.ipynb). (2) There is a direct interface available to the pulsar backend, which exposes the full functionality of the backend (including opacity, which is not yet available in PyTorch3D). Examples showing its use as well as the matching PyTorch3D interface code are available in [this folder](https://github.com/facebookresearch/pytorch3d/tree/master/docs/examples).

 ---

@@ -84,7 +84,7 @@ For mesh texturing we offer several options (in `pytorch3d/renderer/mesh/texturi

 1. **Vertex Textures**: D dimensional textures for each vertex (for example an RGB color) which can be interpolated across the face. This can be represented as an `(N, V, D)` tensor. This is a fairly simple representation though and cannot model complex textures if the mesh faces are large.
 2. **UV Textures**: vertex UV coordinates and **one** texture map for the whole mesh. For a point on a face with given barycentric coordinates, the face color can be computed by interpolating the vertex uv coordinates and then sampling from the texture map. This representation requires two tensors (UVs: `(N, V, 2), Texture map: `(N, H, W, 3)`), and is limited to only support one texture map per mesh.
-3. **Face Textures**: In more complex cases such as ShapeNet meshes, there are multiple texture maps per mesh and some faces have texture while other do not. For these cases, a more flexible representation is a texture atlas, where each face is represented as an `(RxR)` texture map where R is the texture resolution. For a given point on the face, the texture value can be sampled from the per face texture map using the barycentric coordinates of the point. This representation requires one tensor of shape `(N, F, R, R, 3)`. This texturing method is inspired by the SoftRasterizer implementation. For more details refer to the [`make_material_atlas`](https://github.com/facebookresearch/pytorch3d/blob/master/pytorch3d/io/mtl_io.py#L123) and [`sample_textures`](https://github.com/facebookresearch/pytorch3d/blob/master/pytorch3d/renderer/mesh/textures.py#L452) functions. **NOTE:**: The `TexturesAtlas` texture sampling is only differentiable with respect to the texture atlas but not differentiable with respect to the barycentric coordinates.
+3. **Face Textures**: In more complex cases such as ShapeNet meshes, there are multiple texture maps per mesh and some faces have texture while other do not. For these cases, a more flexible representation is a texture atlas, where each face is represented as an `(RxR)` texture map where R is the texture resolution. For a given point on the face, the texture value can be sampled from the per face texture map using the barycentric coordinates of the point. This representation requires one tensor of shape `(N, F, R, R, 3)`. This texturing method is inspired by the SoftRasterizer implementation. For more details refer to the [`make_material_atlas`](https://github.com/facebookresearch/pytorch3d/blob/main/pytorch3d/io/mtl_io.py#L123) and [`sample_textures`](https://github.com/facebookresearch/pytorch3d/blob/main/pytorch3d/renderer/mesh/textures.py#L452) functions. **NOTE:**: The `TexturesAtlas` texture sampling is only differentiable with respect to the texture atlas but not differentiable with respect to the barycentric coordinates.


 <img src="assets/texturing.jpg" width="1000">
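The first texturing option above interpolates per-vertex values across a face. A minimal sketch of that step for a single triangle is given below, assuming barycentric weights that sum to one. The function name `interpolate_vertex_colors` is hypothetical and this is plain Python, not the batched tensor code in `pytorch3d/renderer/mesh`.

```python
from typing import Sequence, Tuple


def interpolate_vertex_colors(
    bary: Sequence[float],
    colors: Sequence[Sequence[float]],
) -> Tuple[float, ...]:
    """Blend the three vertex colors of one face with barycentric weights.

    bary:   (w0, w1, w2), assumed non-negative and summing to 1.
    colors: three D-dimensional vertex colors, e.g. RGB triples.
    """
    w0, w1, w2 = bary
    c0, c1, c2 = colors
    # Weighted sum, computed independently for each color channel.
    return tuple(w0 * a + w1 * b + w2 * c for a, b, c in zip(c0, c1, c2))


red, green, blue = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)
at_vertex = interpolate_vertex_colors((1.0, 0.0, 0.0), (red, green, blue))
on_edge = interpolate_vertex_colors((0.5, 0.5, 0.0), (red, green, blue))
```

The same weights applied to per-vertex UV coordinates instead of colors give the lookup position in the texture map for the UV option in item 2.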

@@ -36,10 +36,10 @@

|

||||

"where $d(g_i, g_j)$ is a suitable metric that compares the extrinsics of cameras $g_i$ and $g_j$. \n",

|

||||

"\n",

|

||||

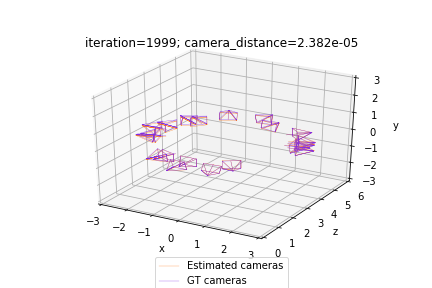

"Visually, the problem can be described as follows. The picture below depicts the situation at the beginning of our optimization. The ground truth cameras are plotted in purple while the randomly initialized estimated cameras are plotted in orange:\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"Our optimization seeks to align the estimated (orange) cameras with the ground truth (purple) cameras, by minimizing the discrepancies between pairs of relative cameras. Thus, the solution to the problem should look as follows:\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"In practice, the camera extrinsics $g_{ij}$ and $g_i$ are represented using objects from the `SfMPerspectiveCameras` class initialized with the corresponding rotation and translation matrices `R_absolute` and `T_absolute` that define the extrinsic parameters $g = (R, T); R \\in SO(3); T \\in \\mathbb{R}^3$. In order to ensure that `R_absolute` is a valid rotation matrix, we represent it using an exponential map (implemented with `so3_exp_map`) of the axis-angle representation of the rotation `log_R_absolute`.\n",

|

||||

"\n",

|

||||
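The last notebook cell in this hunk explains that a rotation is kept valid by parameterizing it with an axis-angle vector and applying an exponential map. The sketch below shows the standard Rodrigues formula for one vector in plain Python. It illustrates the idea only and is not PyTorch3D's `so3_exp_map`, which operates on batched tensors; the function name `axis_angle_to_matrix` is an assumption.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def axis_angle_to_matrix(log_rot: Sequence[float]) -> Matrix:
    """Rodrigues formula: R = I + (sin t / t) K + ((1 - cos t) / t^2) K^2.

    K is the skew-symmetric matrix of the axis-angle vector and t is its norm.
    """
    x, y, z = log_rot
    theta = math.sqrt(x * x + y * y + z * z)
    k = [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]
    k2 = [[sum(k[i][m] * k[m][j] for m in range(3)) for j in range(3)] for i in range(3)]
    if theta < 1e-8:
        # Small-angle limits of the two coefficients.
        a, b = 1.0, 0.5
    else:
        a = math.sin(theta) / theta
        b = (1.0 - math.cos(theta)) / (theta * theta)
    return [
        [(1.0 if i == j else 0.0) + a * k[i][j] + b * k2[i][j] for j in range(3)]
        for i in range(3)
    ]


# A quarter turn about the z axis.
quarter_turn_z = axis_angle_to_matrix((0.0, 0.0, math.pi / 2))
```

Because any real 3-vector maps to a valid rotation matrix, an optimizer can update the axis-angle parameters freely without leaving SO(3), which is the reason the notebook gives for this representation.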

@@ -167,11 +167,11 @@
 },
 "outputs": [],
 "source": [
-"!wget https://raw.githubusercontent.com/facebookresearch/pytorch3d/master/docs/tutorials/utils/camera_visualization.py\n",
+"!wget https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/docs/tutorials/utils/camera_visualization.py\n",
 "from camera_visualization import plot_camera_scene\n",
 "\n",
 "!mkdir data\n",
-"!wget -P data https://raw.githubusercontent.com/facebookresearch/pytorch3d/master/docs/tutorials/data/camera_graph.pth"
+"!wget -P data https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/docs/tutorials/data/camera_graph.pth"
 ]
 },
 {

@@ -112,7 +112,7 @@
 "metadata": {},
 "outputs": [],
 "source": [
-"!wget https://raw.githubusercontent.com/facebookresearch/pytorch3d/master/docs/tutorials/utils/plot_image_grid.py\n",
+"!wget https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/docs/tutorials/utils/plot_image_grid.py\n",
 "from plot_image_grid import image_grid"
 ]
 },

@@ -126,8 +126,8 @@
 "metadata": {},
 "outputs": [],
 "source": [
-"!wget https://raw.githubusercontent.com/facebookresearch/pytorch3d/master/docs/tutorials/utils/plot_image_grid.py\n",
-"!wget https://raw.githubusercontent.com/facebookresearch/pytorch3d/master/docs/tutorials/utils/generate_cow_renders.py\n",
+"!wget https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/docs/tutorials/utils/plot_image_grid.py\n",
+"!wget https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/docs/tutorials/utils/generate_cow_renders.py\n",
 "from plot_image_grid import image_grid\n",
 "from generate_cow_renders import generate_cow_renders"
 ]

@@ -155,7 +155,7 @@
 },
 "outputs": [],
 "source": [
-"!wget https://raw.githubusercontent.com/facebookresearch/pytorch3d/master/docs/tutorials/utils/plot_image_grid.py\n",
+"!wget https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/docs/tutorials/utils/plot_image_grid.py\n",
 "from plot_image_grid import image_grid"
 ]
 },

@@ -106,8 +106,8 @@
 "metadata": {},
 "outputs": [],
 "source": [
-"!wget https://raw.githubusercontent.com/facebookresearch/pytorch3d/master/docs/tutorials/utils/plot_image_grid.py\n",
-"!wget https://raw.githubusercontent.com/facebookresearch/pytorch3d/master/docs/tutorials/utils/generate_cow_renders.py\n",
+"!wget https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/docs/tutorials/utils/plot_image_grid.py\n",
+"!wget https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/docs/tutorials/utils/generate_cow_renders.py\n",
 "from plot_image_grid import image_grid\n",
 "from generate_cow_renders import generate_cow_renders"
 ]

@@ -154,7 +154,7 @@
 },
 "outputs": [],
 "source": [
-"!wget https://raw.githubusercontent.com/facebookresearch/pytorch3d/master/docs/tutorials/utils/plot_image_grid.py\n",
+"!wget https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/docs/tutorials/utils/plot_image_grid.py\n",
 "from plot_image_grid import image_grid"
 ]
 },

@@ -3,12 +3,12 @@ Neural Radiance Fields in PyTorch3D

 This project implements the Neural Radiance Fields (NeRF) from [1].

-<img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/master/.github/nerf_project_logo.gif" width="600" height="338"/>
+<img src="https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/.github/nerf_project_logo.gif" width="600" height="338"/>


 Installation
 ------------
-1) [Install PyTorch3D](https://github.com/facebookresearch/pytorch3d/blob/master/INSTALL.md)
+1) [Install PyTorch3D](https://github.com/facebookresearch/pytorch3d/blob/main/INSTALL.md)
 - Note that this repo requires `PyTorch` version `>= v1.6.0` due to dependency on `torch.searchsorted`.

 2) Install other dependencies:

@@ -135,7 +135,7 @@ loss_chamfer, _ = chamfer_distance(sample_sphere, sample_test)
 <Container>
 <ol>
 <li>
-<strong>Install PyTorch3D </strong> (following the instructions <a href="https://github.com/facebookresearch/pytorch3d/blob/master/INSTALL.md">here</a>)
+<strong>Install PyTorch3D </strong> (following the instructions <a href="https://github.com/facebookresearch/pytorch3d/blob/main/INSTALL.md">here</a>)
 </li>
 <li>
 <strong>Try a few 3D operators </strong>

@@ -19,7 +19,7 @@ class Users extends React.Component {
 return null;
 }

-const editUrl = `${siteConfig.repoUrl}/edit/master/website/siteConfig.js`;
+const editUrl = `${siteConfig.repoUrl}/edit/main/website/siteConfig.js`;
 const showcase = siteConfig.users.map(user => (
 <a href={user.infoLink} key={user.infoLink}>
 <img src={user.image} alt={user.caption} title={user.caption} />
