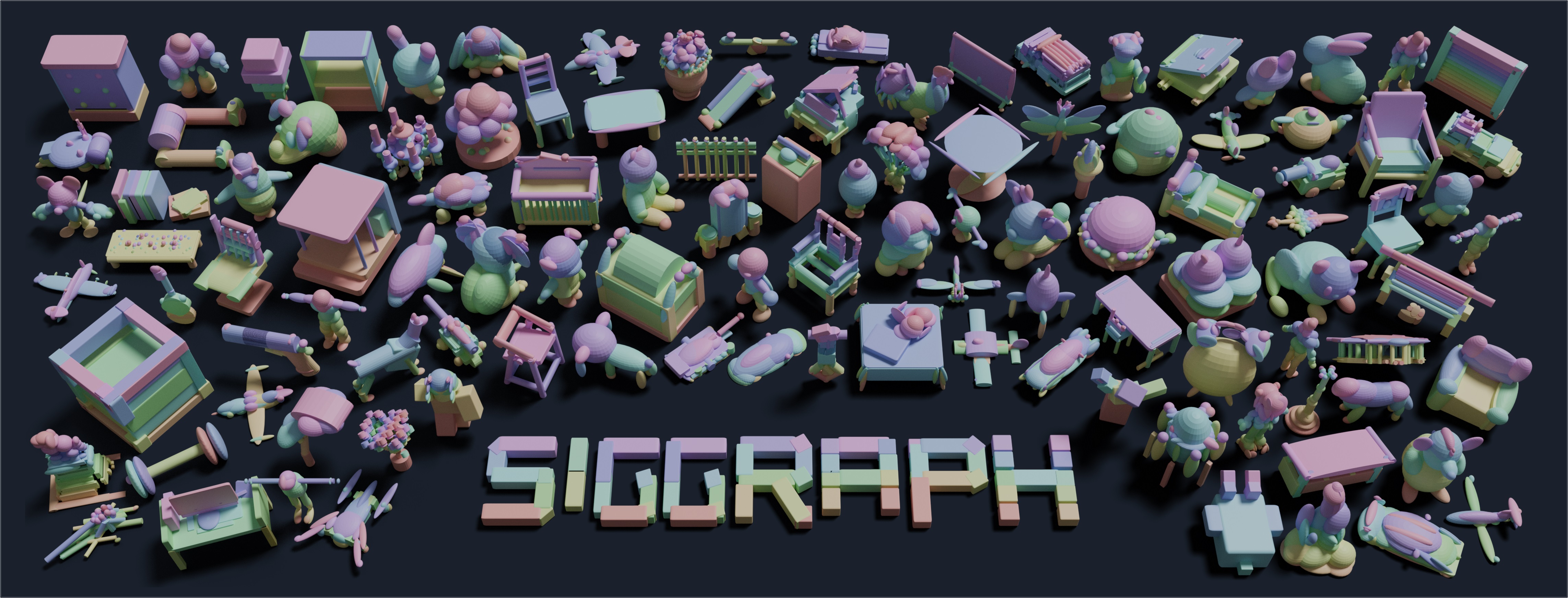

PrimitiveAnything: Human-Crafted 3D Primitive Assembly Generation with Auto-Regressive Transformer

Jingwen Ye1*, Yuze He1,2*, Yanning Zhou1†, Yiqin Zhu1, Kaiwen Xiao1, Yong-Jin Liu2†, Wei Yang1, Xiao Han1†

1Tencent AIPD 2Tsinghua University

*Equal Contributions †Corresponding Authors

🔥 Updates

[2025/05/07] Test dataset, code, pretrained checkpoints, and Gradio demo are released!

⚙️ Deployment

Set up a Python environment and install the required packages:

conda create -n primitiveanything python=3.9 -y

conda activate primitiveanything

# Install torch, torchvision based on your machine configuration

pip install torch==2.1.0 torchvision==0.16.0 --index-url https://download.pytorch.org/whl/cu118

# Install other dependencies

pip install -r requirements.txt
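After installing, it can help to confirm that the pinned versions are the ones actually present in the environment. The following is a minimal sketch using only the standard library; the helper name `check_pins` is hypothetical and not part of this repository, and the pins are copied from the install command above.

```python
from importlib import metadata

# Pins taken from the pip install command above.
EXPECTED = {"torch": "2.1.0", "torchvision": "0.16.0"}


def check_pins(expected, get_version=metadata.version):
    """Return {package: problem} for packages that are missing or off-pin.

    Hypothetical helper for illustration; not part of the repository.
    """
    problems = {}
    for name, wanted in expected.items():
        try:
            found = get_version(name)
        except metadata.PackageNotFoundError:
            problems[name] = "not installed"
            continue
        # CUDA wheels carry a local suffix such as "2.1.0+cu118";
        # compare only the part before the "+".
        if found.split("+")[0] != wanted:
            problems[name] = f"found {found}, expected {wanted}"
    return problems


if __name__ == "__main__":
    for name, problem in check_pins(EXPECTED).items():
        print(f"{name}: {problem}")
```

An empty result means both packages match the pins; anything else lists the package and what was found instead.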

Then download data and pretrained weights:

- Our Model Weights: Download from our 🤗 Hugging Face repository (download here) and place them in ./ckpt/.

- Michelangelo’s Point Cloud Encoder: Download weights from Michelangelo’s Hugging Face repo and save them to ./ckpt/.

- Demo and test data: Download from this Google Drive link, then decompress the files into ./data/; or download them from our 🤗 Hugging Face dataset repository:

from huggingface_hub import hf_hub_download, list_repo_files

# Get list of all files in repo
files = list_repo_files(repo_id="hyz317/PrimitiveAnything", repo_type="dataset")

# Download each file
for file in files:
    file_path = hf_hub_download(
        repo_id="hyz317/PrimitiveAnything",
        filename=file,
        repo_type="dataset",
        local_dir='./data'
    )

After downloading and organizing the files, your project directory should look like this:

- data/
    ├── basic_shapes_norm/
    ├── basic_shapes_norm_pc10000/
    ├── demo_glb/                     # Demo files in GLB format
    └── test_pc/                      # Test point cloud data
- ckpt/
    ├── mesh-transformer.ckpt.60.pt   # Our model checkpoint
    └── shapevae-256.ckpt             # Michelangelo ShapeVAE checkpoint
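A quick way to confirm the layout above before running anything is to check that each listed directory and checkpoint exists. This is a minimal sketch, not a script shipped with the repository; `missing_entries` is a hypothetical helper, and the paths are copied from the listing above.

```python
from pathlib import Path

# Entries copied from the directory listing above.
EXPECTED_DIRS = [
    "data/basic_shapes_norm",
    "data/basic_shapes_norm_pc10000",
    "data/demo_glb",
    "data/test_pc",
]
EXPECTED_FILES = [
    "ckpt/mesh-transformer.ckpt.60.pt",
    "ckpt/shapevae-256.ckpt",
]


def missing_entries(root="."):
    """Return the expected paths that are absent under root (hypothetical helper)."""
    root = Path(root)
    missing = [d for d in EXPECTED_DIRS if not (root / d).is_dir()]
    missing += [f for f in EXPECTED_FILES if not (root / f).is_file()]
    return missing


if __name__ == "__main__":
    # Run from the project root; prints nothing when the layout is complete.
    for entry in missing_entries():
        print(f"missing: {entry}")
```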

🖥️ Run PrimitiveAnything

Demo

python demo.py --input ./data/demo_glb --log_path ./results/demo

Notes:

- --input accepts either:
    - Any standard 3D file (GLB, OBJ, etc.)
    - A directory containing multiple 3D files

- For optimal results with fine structures, the demo automatically applies marching cubes and dilation operations, which the testing and evaluation pipeline does not. This prevents quality degradation in thin areas.
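The file-or-directory behaviour of --input described above can be illustrated with a short sketch. This is not the code in demo.py: `collect_inputs` is a hypothetical helper, and the extension set is an assumption made for illustration, since the formats the demo actually loads may differ.

```python
from pathlib import Path

# Assumed extension set for illustration only; demo.py may accept other formats.
MESH_EXTENSIONS = {".glb", ".obj", ".ply", ".stl"}


def collect_inputs(path):
    """Return mesh files for a path that is either one file or a directory.

    Hypothetical helper; a single file is returned as-is, a directory is
    scanned (non-recursively) for files with a known mesh extension.
    """
    path = Path(path)
    if path.is_file():
        return [path]
    if path.is_dir():
        return sorted(
            p for p in path.iterdir()
            if p.is_file() and p.suffix.lower() in MESH_EXTENSIONS
        )
    raise FileNotFoundError(f"no such file or directory: {path}")
```

For example, `collect_inputs("./data/demo_glb")` would list the mesh files in that directory, while passing a single file path would return a one-element list.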

Testing and Evaluation

# Autoregressive generation

python infer.py

# Sample point clouds from predictions

python sample.py

# Calculate evaluation metrics

python eval.py
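Judging from the comments above, the three commands form a chain: sampling reads the predictions and evaluation reads the samples, so a later step should not run if an earlier one fails. A minimal sketch of a wrapper that enforces this order follows; `run_steps` is a hypothetical helper, and it assumes the scripts are run without arguments from the project root, as in the commands above.

```python
import subprocess
import sys

# Script names come from the commands above, in the order they are listed.
STEPS = ["infer.py", "sample.py", "eval.py"]


def run_steps(steps=STEPS, runner=subprocess.run):
    """Run each script in order and stop at the first failure.

    Hypothetical helper. Returns the list of scripts that completed.
    """
    done = []
    for script in steps:
        # check=True makes subprocess.run raise CalledProcessError
        # when the script exits with a non-zero status.
        runner([sys.executable, script], check=True)
        done.append(script)
    return done
```

Calling `run_steps()` from the project root runs the three scripts with the current Python interpreter and raises `subprocess.CalledProcessError` as soon as one of them fails.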

📝 Citation

If you find our work useful, please kindly cite:

@article{ye2025primitiveanything,
  title={PrimitiveAnything: Human-Crafted 3D Primitive Assembly Generation with Auto-Regressive Transformer},
  author={Ye, Jingwen and He, Yuze and Zhou, Yanning and Zhu, Yiqin and Xiao, Kaiwen and Liu, Yong-Jin and Yang, Wei and Han, Xiao},
  journal={arXiv preprint arXiv:2505.04622},
  year={2025}
}