mirror of https://github.com/facebookresearch/pytorch3d.git

synced 2026-02-27 16:56:01 +08:00

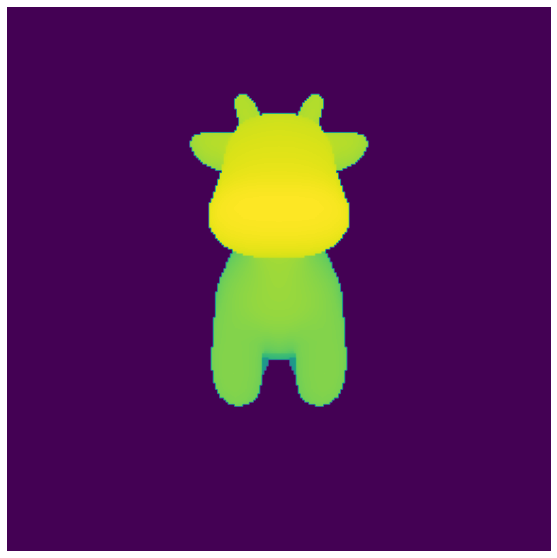

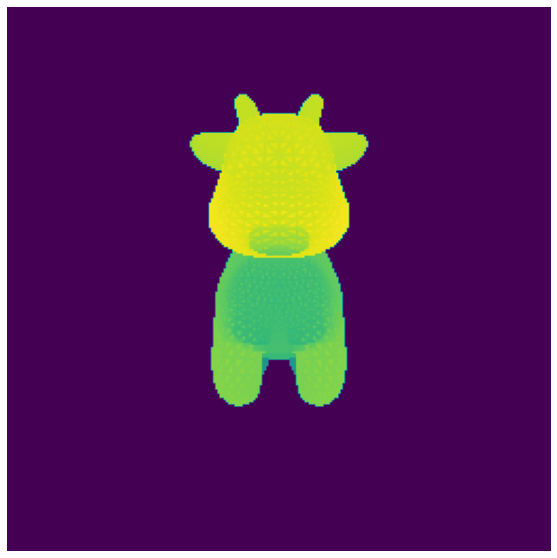

Summary:
X-link: https://github.com/fairinternal/pytorch3d/pull/36

This adds two shaders for rendering depth maps for meshes. This is useful for structure-from-motion applications that learn depths from camera-pair disparities.

There are two shaders: a hard one that just returns the distances, and a soft one that takes a cumsum over the probabilities of the points and uses it to form a weighted sum of the depths. Areas not covered by any face are set to the `zfar` distance.

Output from this renderer is `[N, H, W]`: since it's just depth, there is no need for channels.

I haven't tested this in an ML model yet, just in a notebook.

Sample outputs for the hard and soft shaders were attached to the pull request as images.

Pull Request resolved: https://github.com/facebookresearch/pytorch3d/pull/1208

Reviewed By: bottler

Differential Revision: D36682194

Pulled By: d4l3k

fbshipit-source-id: 5d4e10c6fb0fff5427be4ddd3bd76305a7ccc1e2
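The hard/soft distinction described above can be illustrated with a minimal NumPy sketch. This is not the PyTorch3D implementation: the function names `hard_depth` and `soft_depth`, the argument layout (`[N, H, W, K]` buffers sorted nearest-first, with negative face indices marking empty slots), and the precomputed `probs` array are all assumptions made for illustration.

```python
import numpy as np


def hard_depth(zbuf, pix_to_face, zfar=100.0):
    """Hypothetical hard depth: nearest face's depth, or zfar if no face.

    zbuf, pix_to_face: [N, H, W, K], assumed sorted nearest-first along K;
    pix_to_face < 0 is assumed to mean "no face in this slot".
    Returns an [N, H, W] depth map.
    """
    covered = pix_to_face[..., 0] >= 0
    return np.where(covered, zbuf[..., 0], zfar)


def soft_depth(zbuf, pix_to_face, probs, zfar=100.0):
    """Hypothetical soft depth: cumsum of probabilities -> weighted sum.

    probs: [N, H, W, K] per-face coverage probabilities in [0, 1]
    (assumed given; how they are derived is outside this sketch).
    """
    mask = pix_to_face >= 0
    probs = np.where(mask, probs, 0.0)
    depths = np.where(mask, zbuf, zfar)

    # Append a background "face" at zfar with probability 1 so the
    # weights always sum to 1 and empty pixels fall back to zfar.
    pad_shape = zbuf.shape[:-1] + (1,)
    depths = np.concatenate([depths, np.full(pad_shape, zfar)], axis=-1)
    probs = np.concatenate([probs, np.ones(pad_shape)], axis=-1)

    # Cumulative probability, capped at 1; its increments are the weights.
    cum = np.minimum(np.cumsum(probs, axis=-1), 1.0)
    weights = np.diff(cum, axis=-1, prepend=0.0)
    return (weights * depths).sum(axis=-1)


# One image, one row, two pixels, K=2: the first pixel sees two faces,
# the second pixel sees none.
zbuf = np.array([[[[2.0, 4.0], [-1.0, -1.0]]]])
pix_to_face = np.array([[[[0, 1], [-1, -1]]]])
probs = np.array([[[[0.5, 0.25], [0.0, 0.0]]]])

hard = hard_depth(zbuf, pix_to_face, zfar=10.0)
soft = soft_depth(zbuf, pix_to_face, probs, zfar=10.0)
print(hard.shape, soft.shape)  # both are [N, H, W] = (1, 1, 2)
```

In this sketch the covered pixel gets weights 0.5, 0.25 and 0.25 (the remainder goes to the `zfar` background), while the empty pixel puts all of its weight on `zfar`, matching the behaviour the summary describes for uncovered areas.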