mirror of

https://github.com/facebookresearch/pytorch3d.git

synced 2025-08-02 03:42:50 +08:00

Summary:

### Generalise tutorials' pip searching:

## Required Information:

This diff contains changes to several PyTorch3D tutorials.

**Purpose of this diff:**

Replace the current installation code with a more streamlined approach that tries to install the wheel first and falls back to installing from source if the wheel is not found.

**Why this diff is required:**

This diff makes it easier to cope with new PyTorch releases and reduces the need for manual intervention. The current process involves checking the version of PyTorch in Colab and building a new wheel if it doesn't match the expected version, which generates additional work each time there is a new PyTorch version in Colab.

**Changes:**

Before:

```
if torch.__version__.startswith("2.1.") and sys.platform.startswith("linux"):
    # We try to install PyTorch3D via a released wheel.
    pyt_version_str=torch.__version__.split("+")[0].replace(".", "")
    version_str="".join([
        f"py3{sys.version_info.minor}_cu",
        torch.version.cuda.replace(".",""),
        f"_pyt{pyt_version_str}"
    ])
    !pip install fvcore iopath
    !pip install --no-index --no-cache-dir pytorch3d -f https://dl.fbaipublicfiles.com/pytorch3d/packaging/wheels/{version_str}/download.html
else:
    # We try to install PyTorch3D from source.
    !pip install 'git+https://github.com/facebookresearch/pytorch3d.git@stable'
```

After:

```
pyt_version_str=torch.__version__.split("+")[0].replace(".", "")
version_str="".join([
    f"py3{sys.version_info.minor}_cu",
    torch.version.cuda.replace(".",""),
    f"_pyt{pyt_version_str}"
])
!pip install fvcore iopath
if sys.platform.startswith("linux"):
    # We try to install PyTorch3D via a released wheel.
    !pip install --no-index --no-cache-dir pytorch3d -f https://dl.fbaipublicfiles.com/pytorch3d/packaging/wheels/{version_str}/download.html
    pip_list = !pip freeze
    need_pytorch3d = not any(i.startswith("pytorch3d==") for i in pip_list)
if need_pytorch3d:
    # We try to install PyTorch3D from source.
    !pip install 'git+https://github.com/facebookresearch/pytorch3d.git@stable'
```
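
The two pieces of logic in the new cell, building the wheel directory tag and deciding whether a source build is still needed, can be sketched as plain Python functions that run without `torch` or IPython. This is only an illustrative sketch: the function names `build_version_str` and `needs_source_install` and the sample version strings are assumptions made for the example, not part of the diff.

```python
from typing import Iterable


def build_version_str(torch_version: str, cuda_version: str, py_minor: int) -> str:
    """Build the wheel directory tag used in the download URL (hypothetical helper)."""
    # Drop the local build suffix and the dots: "2.1.0+cu121" -> "210".
    pyt_version_str = torch_version.split("+")[0].replace(".", "")
    return "".join([
        f"py3{py_minor}_cu",
        cuda_version.replace(".", ""),
        f"_pyt{pyt_version_str}",
    ])


def needs_source_install(pip_freeze_lines: Iterable[str]) -> bool:
    """Return True when no 'pytorch3d==<version>' line is present (hypothetical helper)."""
    return not any(line.startswith("pytorch3d==") for line in pip_freeze_lines)


# Sample inputs are made up for illustration.
print(build_version_str("2.1.0+cu121", "12.1", 10))   # py310_cu121_pyt210
print(needs_source_install(["torch==2.1.0+cu121"]))    # True
```

Checking the `pip freeze` output, rather than gating on a hard-coded PyTorch version, is what lets the cell fall back to a source build whenever no matching wheel has been published.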

Reviewed By: bottler

Differential Revision: D55431832

fbshipit-source-id: a8de9162470698320241ae8401427dcb1ce17c37

473 lines

16 KiB

Plaintext


{
 "cells": [
  {
   "cell_type": "code",
   "execution_count": null,
   "metadata": {
    "colab": {},
    "colab_type": "code",
    "id": "bD6DUkgzmFoR"
   },
   "outputs": [],
   "source": [
    "# Copyright (c) Meta Platforms, Inc. and affiliates. All rights reserved."
   ]
  },
{

|

|

"cell_type": "markdown",

|

|

"metadata": {

|

|

"colab_type": "text",

|

|

"id": "Jj6j6__ZmFoW"

|

|

},

|

|

"source": [

|

|

"# Absolute camera orientation given set of relative camera pairs\n",

|

|

"\n",

|

|

"This tutorial showcases the `cameras`, `transforms` and `so3` API.\n",

|

|

"\n",

|

|

"The problem we deal with is defined as follows:\n",

|

|

"\n",

|

|

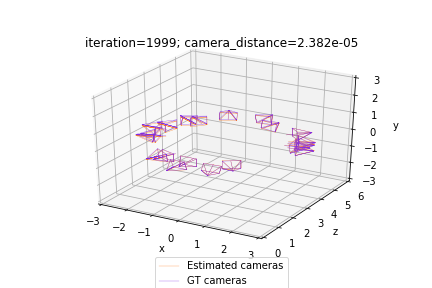

"Given an optical system of $N$ cameras with extrinsics $\\{g_1, ..., g_N | g_i \\in SE(3)\\}$, and a set of relative camera positions $\\{g_{ij} | g_{ij}\\in SE(3)\\}$ that map between coordinate frames of randomly selected pairs of cameras $(i, j)$, we search for the absolute extrinsic parameters $\\{g_1, ..., g_N\\}$ that are consistent with the relative camera motions.\n",

|

|

"\n",

|

|

"More formally:\n",

|

|

"$$\n",

|

|

"g_1, ..., g_N = \n",

|

|

"{\\arg \\min}_{g_1, ..., g_N} \\sum_{g_{ij}} d(g_{ij}, g_i^{-1} g_j),\n",

|

|

"$$,\n",

|

|

"where $d(g_i, g_j)$ is a suitable metric that compares the extrinsics of cameras $g_i$ and $g_j$. \n",

|

|

"\n",

|

|

"Visually, the problem can be described as follows. The picture below depicts the situation at the beginning of our optimization. The ground truth cameras are plotted in purple while the randomly initialized estimated cameras are plotted in orange:\n",

|

|

"\n",

|

|

"\n",

|

|

"Our optimization seeks to align the estimated (orange) cameras with the ground truth (purple) cameras, by minimizing the discrepancies between pairs of relative cameras. Thus, the solution to the problem should look as follows:\n",

|

|

"\n",

|

|

"\n",

|

|

"In practice, the camera extrinsics $g_{ij}$ and $g_i$ are represented using objects from the `SfMPerspectiveCameras` class initialized with the corresponding rotation and translation matrices `R_absolute` and `T_absolute` that define the extrinsic parameters $g = (R, T); R \\in SO(3); T \\in \\mathbb{R}^3$. In order to ensure that `R_absolute` is a valid rotation matrix, we represent it using an exponential map (implemented with `so3_exp_map`) of the axis-angle representation of the rotation `log_R_absolute`.\n",

|

|

"\n",

|

|

"Note that the solution to this problem could only be recovered up to an unknown global rigid transformation $g_{glob} \\in SE(3)$. Thus, for simplicity, we assume knowledge of the absolute extrinsics of the first camera $g_0$. We set $g_0$ as a trivial camera $g_0 = (I, \\vec{0})$.\n"

|

|

]

|

|

},

|

|

  {
   "cell_type": "markdown",
   "metadata": {
    "colab_type": "text",
    "id": "nAQY4EnHmFoX"
   },
   "source": [
    "## 0. Install and Import Modules"
   ]
  },
  {
   "cell_type": "markdown",
   "metadata": {
    "colab_type": "text",
    "id": "WAHR1LMJmP-h"
   },
   "source": [
    "Ensure `torch` and `torchvision` are installed. If `pytorch3d` is not installed, install it using the following cell:"
   ]
  },
  {
   "cell_type": "code",
   "execution_count": null,
   "metadata": {
    "colab": {
     "base_uri": "https://localhost:8080/",
     "height": 717
    },
    "colab_type": "code",
    "id": "uo7a3gdImMZx",
    "outputId": "bf07fd03-dec0-4294-b2ba-9cf5b7333672"
   },
   "outputs": [],
   "source": [
    "import os\n",
    "import sys\n",
    "import torch\n",
    "import subprocess\n",
    "need_pytorch3d=False\n",
    "try:\n",
    "    import pytorch3d\n",
    "except ModuleNotFoundError:\n",
    "    need_pytorch3d=True\n",
    "if need_pytorch3d:\n",
    "    pyt_version_str=torch.__version__.split(\"+\")[0].replace(\".\", \"\")\n",
    "    version_str=\"\".join([\n",
    "        f\"py3{sys.version_info.minor}_cu\",\n",
    "        torch.version.cuda.replace(\".\",\"\"),\n",
    "        f\"_pyt{pyt_version_str}\"\n",
    "    ])\n",
    "    !pip install fvcore iopath\n",
    "    if sys.platform.startswith(\"linux\"):\n",
    "        print(\"Trying to install wheel for PyTorch3D\")\n",
    "        !pip install --no-index --no-cache-dir pytorch3d -f https://dl.fbaipublicfiles.com/pytorch3d/packaging/wheels/{version_str}/download.html\n",
    "        pip_list = !pip freeze\n",
    "        need_pytorch3d = not any(i.startswith(\"pytorch3d==\") for i in pip_list)\n",
    "    if need_pytorch3d:\n",
    "        print(f\"failed to find/install wheel for {version_str}\")\n",
    "if need_pytorch3d:\n",
    "    print(\"Installing PyTorch3D from source\")\n",
    "    !pip install ninja\n",
    "    !pip install 'git+https://github.com/facebookresearch/pytorch3d.git@stable'"
   ]
  },
  {
   "cell_type": "code",
   "execution_count": null,
   "metadata": {
    "colab": {
     "base_uri": "https://localhost:8080/",
     "height": 34
    },
    "colab_type": "code",
    "id": "UgLa7XQimFoY",
    "outputId": "16404f4f-4c7c-4f3f-b96a-e9a876def4c1"
   },
   "outputs": [],
   "source": [
    "# imports\n",
    "import torch\n",
    "from pytorch3d.transforms.so3 import (\n",
    "    so3_exp_map,\n",
    "    so3_relative_angle,\n",
    ")\n",
    "from pytorch3d.renderer.cameras import (\n",
    "    SfMPerspectiveCameras,\n",
    ")\n",
    "\n",
    "# add path for demo utils\n",
    "import sys\n",
    "import os\n",
    "sys.path.append(os.path.abspath(''))\n",
    "\n",
    "# set for reproducibility\n",
    "torch.manual_seed(42)\n",
    "if torch.cuda.is_available():\n",
    "    device = torch.device(\"cuda:0\")\n",
    "else:\n",
    "    device = torch.device(\"cpu\")\n",
    "    print(\"WARNING: CPU only, this will be slow!\")"
   ]
  },
  {
   "cell_type": "markdown",
   "metadata": {
    "colab_type": "text",
    "id": "u4emnRuzmpRB"
   },
   "source": [
    "If using **Google Colab**, fetch the utils file for plotting the camera scene, and the ground truth camera positions:"
   ]
  },
  {
   "cell_type": "code",
   "execution_count": null,
   "metadata": {
    "colab": {
     "base_uri": "https://localhost:8080/",
     "height": 391
    },
    "colab_type": "code",
    "id": "kOvMPYJdmd15",
    "outputId": "9f2a601b-891b-4cb6-d8f6-a444f7829132"
   },
   "outputs": [],
   "source": [
    "!wget https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/docs/tutorials/utils/camera_visualization.py\n",
    "from camera_visualization import plot_camera_scene\n",
    "\n",
    "!mkdir data\n",
    "!wget -P data https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/docs/tutorials/data/camera_graph.pth"
   ]
  },
  {
   "cell_type": "markdown",
   "metadata": {
    "colab_type": "text",
    "id": "L9WD5vaimw3K"
   },
   "source": [
    "OR if running **locally** uncomment and run the following cell:"
   ]
  },
  {
   "cell_type": "code",
   "execution_count": null,
   "metadata": {
    "colab": {},
    "colab_type": "code",
    "id": "ucGlQj5EmmJ5"
   },
   "outputs": [],
   "source": [
    "# from utils import plot_camera_scene"
   ]
  },
  {
   "cell_type": "markdown",
   "metadata": {
    "colab_type": "text",
    "id": "7WeEi7IgmFoc"
   },
   "source": [
    "## 1. Set up Cameras and load ground truth positions"
   ]
  },
  {
   "cell_type": "code",
   "execution_count": null,
   "metadata": {
    "colab": {},
    "colab_type": "code",
    "id": "D_Wm0zikmFod"
   },
   "outputs": [],
   "source": [
    "# load the SE3 graph of relative/absolute camera positions\n",
    "camera_graph_file = './data/camera_graph.pth'\n",
    "(R_absolute_gt, T_absolute_gt), \\\n",
    "    (R_relative, T_relative), \\\n",
    "    relative_edges = \\\n",
    "        torch.load(camera_graph_file)\n",
    "\n",
    "# create the relative cameras\n",
    "cameras_relative = SfMPerspectiveCameras(\n",
    "    R = R_relative.to(device),\n",
    "    T = T_relative.to(device),\n",
    "    device = device,\n",
    ")\n",
    "\n",
    "# create the absolute ground truth cameras\n",
    "cameras_absolute_gt = SfMPerspectiveCameras(\n",
    "    R = R_absolute_gt.to(device),\n",
    "    T = T_absolute_gt.to(device),\n",
    "    device = device,\n",
    ")\n",
    "\n",
    "# the number of absolute camera positions\n",
    "N = R_absolute_gt.shape[0]"
   ]
  },
  {
   "cell_type": "markdown",
   "metadata": {
    "colab_type": "text",
    "id": "-f-RNlGemFog"
   },
   "source": [
    "## 2. Define optimization functions\n",
    "\n",
    "### Relative cameras and camera distance\n",
    "We now define two functions crucial for the optimization.\n",
    "\n",
    "**`calc_camera_distance`** compares a pair of cameras. This function is important as it defines the loss that we are minimizing. The method utilizes the `so3_relative_angle` function from the SO3 API.\n",
    "\n",
    "**`get_relative_camera`** computes the parameters of a relative camera that maps between a pair of absolute cameras. Here we utilize the `compose` and `inverse` methods of the `Transform3d` objects from the PyTorch3D Transforms API."
   ]
  },
  {
   "cell_type": "code",
   "execution_count": null,
   "metadata": {
    "colab": {},
    "colab_type": "code",
    "id": "xzzk88RHmFoh"
   },
   "outputs": [],
   "source": [
    "def calc_camera_distance(cam_1, cam_2):\n",
    "    \"\"\"\n",
    "    Calculates the divergence of a batch of pairs of cameras cam_1, cam_2.\n",
    "    The distance is the sum of one minus the cosine of the relative angle\n",
    "    between the rotation components of the camera extrinsics and the squared\n",
    "    l2 distance between the translation vectors, each averaged over the batch.\n",
    "    \"\"\"\n",
    "    # rotation distance\n",
    "    R_distance = (1.-so3_relative_angle(cam_1.R, cam_2.R, cos_angle=True)).mean()\n",
    "    # translation distance\n",
    "    T_distance = ((cam_1.T - cam_2.T)**2).sum(1).mean()\n",
    "    # the final distance is the sum\n",
    "    return R_distance + T_distance\n",
    "\n",
    "def get_relative_camera(cams, edges):\n",
    "    \"\"\"\n",
    "    For each pair of indices (i,j) in \"edges\" generate a camera\n",
    "    that maps from the coordinates of the camera cams[i] to \n",
    "    the coordinates of the camera cams[j]\n",
    "    \"\"\"\n",
    "\n",
    "    # first generate the world-to-view Transform3d objects of each \n",
    "    # camera pair (i, j) according to the edges argument\n",
    "    trans_i, trans_j = [\n",
    "        SfMPerspectiveCameras(\n",
    "            R = cams.R[edges[:, i]],\n",
    "            T = cams.T[edges[:, i]],\n",
    "            device = device,\n",
    "        ).get_world_to_view_transform()\n",
    "        for i in (0, 1)\n",
    "    ]\n",
    "    \n",
    "    # compose the relative transformation as g_i^{-1} g_j\n",
    "    trans_rel = trans_i.inverse().compose(trans_j)\n",
    "    \n",
    "    # generate a camera from the relative transform\n",
    "    matrix_rel = trans_rel.get_matrix()\n",
    "    cams_relative = SfMPerspectiveCameras(\n",
    "        R = matrix_rel[:, :3, :3],\n",
    "        T = matrix_rel[:, 3, :3],\n",
    "        device = device,\n",
    "    )\n",
    "    return cams_relative"
   ]
  },
  {
   "cell_type": "markdown",
   "metadata": {
    "colab_type": "text",
    "id": "Ys9J7MbMmFol"
   },
   "source": [
    "## 3. Optimization\n",
    "Finally, we start the optimization of the absolute cameras.\n",
    "\n",
    "We use SGD with momentum and optimize over `log_R_absolute` and `T_absolute`. \n",
    "\n",
    "As mentioned earlier, `log_R_absolute` is the axis-angle representation of the rotation part of our absolute cameras. We can obtain the 3x3 rotation matrix `R_absolute` that corresponds to `log_R_absolute` with:\n",
    "\n",
    "`R_absolute = so3_exp_map(log_R_absolute)`\n"
   ]
  },
  {
   "cell_type": "code",
   "execution_count": null,
   "metadata": {
    "colab": {
     "base_uri": "https://localhost:8080/",
     "height": 1000
    },
    "colab_type": "code",
    "id": "iOK_DUzVmFom",
    "outputId": "4195bc36-7b84-4070-dcc1-d3abb1e12031"
   },
   "outputs": [],
   "source": [
    "# initialize the absolute log-rotations/translations with random entries\n",
    "log_R_absolute_init = torch.randn(N, 3, dtype=torch.float32, device=device)\n",
    "T_absolute_init = torch.randn(N, 3, dtype=torch.float32, device=device)\n",
    "\n",
    "# furthermore, we know that the first camera is a trivial one \n",
    "# (see the description above)\n",
    "log_R_absolute_init[0, :] = 0.\n",
    "T_absolute_init[0, :] = 0.\n",
    "\n",
    "# instantiate a copy of the initialization of log_R / T\n",
    "log_R_absolute = log_R_absolute_init.clone().detach()\n",
    "log_R_absolute.requires_grad = True\n",
    "T_absolute = T_absolute_init.clone().detach()\n",
    "T_absolute.requires_grad = True\n",
    "\n",
    "# the mask that specifies which cameras are going to be optimized\n",
    "# (since we know the first camera is already correct, \n",
    "# we only optimize over the 2nd-to-last cameras)\n",
    "camera_mask = torch.ones(N, 1, dtype=torch.float32, device=device)\n",
    "camera_mask[0] = 0.\n",
    "\n",
    "# init the optimizer\n",
    "optimizer = torch.optim.SGD([log_R_absolute, T_absolute], lr=.1, momentum=0.9)\n",
    "\n",
    "# run the optimization\n",
    "n_iter = 2000  # fix the number of iterations\n",
    "for it in range(n_iter):\n",
    "    # re-init the optimizer gradients\n",
    "    optimizer.zero_grad()\n",
    "\n",
    "    # compute the absolute camera rotations as \n",
    "    # an exponential map of the logarithms (=axis-angles)\n",
    "    # of the absolute rotations\n",
    "    R_absolute = so3_exp_map(log_R_absolute * camera_mask)\n",
    "\n",
    "    # get the current absolute cameras\n",
    "    cameras_absolute = SfMPerspectiveCameras(\n",
    "        R = R_absolute,\n",
    "        T = T_absolute * camera_mask,\n",
    "        device = device,\n",
    "    )\n",
    "\n",
    "    # compute the relative cameras as a composition of the absolute cameras\n",
    "    cameras_relative_composed = \\\n",
    "        get_relative_camera(cameras_absolute, relative_edges)\n",
    "\n",
    "    # compare the composed cameras with the ground truth relative cameras\n",
    "    # camera_distance corresponds to $d$ from the description\n",
    "    camera_distance = \\\n",
    "        calc_camera_distance(cameras_relative_composed, cameras_relative)\n",
    "\n",
    "    # our loss function is the camera_distance\n",
    "    camera_distance.backward()\n",
    "    \n",
    "    # apply the gradients\n",
    "    optimizer.step()\n",
    "\n",
    "    # plot and print status message\n",
    "    if it % 200==0 or it==n_iter-1:\n",
    "        status = 'iteration=%3d; camera_distance=%1.3e' % (it, camera_distance)\n",
    "        plot_camera_scene(cameras_absolute, cameras_absolute_gt, status)\n",
    "\n",
    "print('Optimization finished.')\n"
   ]
  },
  {
   "cell_type": "markdown",
   "metadata": {
    "colab_type": "text",
    "id": "vncLMvxWnhmO"
   },
   "source": [
    "## 4. Conclusion \n",
    "\n",
    "In this tutorial we learnt how to initialize a batch of SfM Cameras, set up loss functions for bundle adjustment, and run an optimization loop. "
   ]
  }
 ],
 "metadata": {
  "accelerator": "GPU",
  "bento_stylesheets": {
   "bento/extensions/flow/main.css": true,
   "bento/extensions/kernel_selector/main.css": true,
   "bento/extensions/kernel_ui/main.css": true,
   "bento/extensions/new_kernel/main.css": true,
   "bento/extensions/system_usage/main.css": true,
   "bento/extensions/theme/main.css": true
  },
  "colab": {
   "name": "bundle_adjustment.ipynb",
   "provenance": [],
   "toc_visible": true
  },
  "file_extension": ".py",
  "kernelspec": {
   "display_name": "Python 3",
   "language": "python",
   "name": "python3"
  },
  "language_info": {
   "codemirror_mode": {
    "name": "ipython",
    "version": 3
   },
   "file_extension": ".py",
   "mimetype": "text/x-python",
   "name": "python",
   "nbconvert_exporter": "python",
   "pygments_lexer": "ipython3",
   "version": "3.7.5+"
  },
  "mimetype": "text/x-python",
  "name": "python",
  "npconvert_exporter": "python",
  "pygments_lexer": "ipython3",
  "version": 3
 },
 "nbformat": 4,
 "nbformat_minor": 1
}